An LLM CodeForces champion is not taking your software engineering job (yet)

A naïve view of the eval results could lead you to overestimate the current state of AI capability

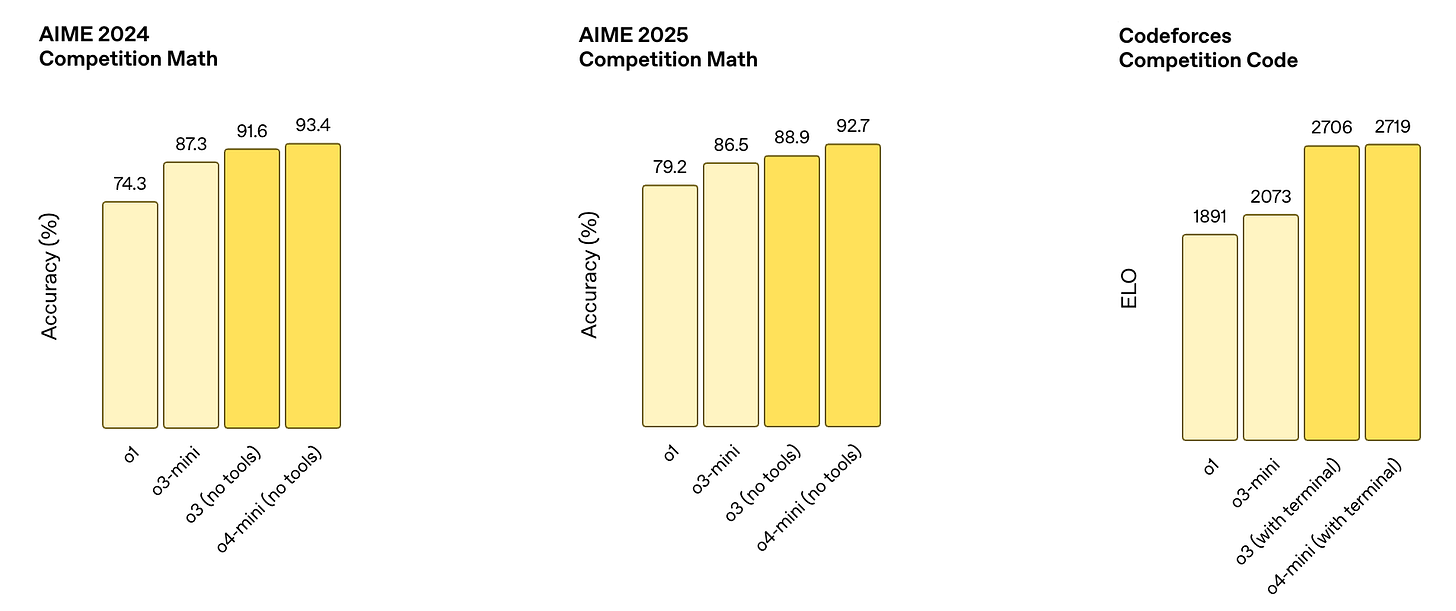

With an Elo rating of 2706, OpenAI’s o3 model is better than 99.7% of competitive programmers on CodeForces (based on this rating distribution data from 2024). Humans with this performance would excel at any top tech company, whereas o3 is not ready to automate even the median big-tech engineer’s job.
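For a sense of what a rating like that means, here is a minimal sketch of the standard Elo expected-score formula. It assumes CodeForces ratings can be read through the plain two-player Elo model, which is a simplification of how contest ratings are actually computed, and the 1500-rated opponent is a hypothetical reference point rather than a figure from the rating data.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the standard Elo model.

    A win counts as 1, a draw as 0.5, and a loss as 0.
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


O3_RATING = 2706              # rating quoted in the text
HYPOTHETICAL_OPPONENT = 1500  # illustrative assumption, not from the text

if __name__ == "__main__":
    p = elo_expected_score(O3_RATING, HYPOTHETICAL_OPPONENT)
    print(f"Expected score against a {HYPOTHETICAL_OPPONENT}-rated opponent: {p:.4f}")
```

Under this model, a gap of roughly 1200 points makes a head-to-head loss very unlikely, which is the sense in which the rating looks so impressive on paper.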

Competitive-programming ability is a much worse predictor of general software-engineering ability in LLMs than in humans. If someone does well on a LeetCode interview, you can conclude that they have some amount of general intelligence that will transfer to open-ended software-engineering ability (as well as enough conscientiousness to prepare for the interview). The same is not true of LLMs: because they can use shallower and less generalizable methods, their ability to solve toy algorithm problems tells you much less about their ability to do real-world engineering tasks. Furthermore, AI models may be good at software engineering but perform comparatively poorly in other cognitive domains like long-term planning, big-picture thinking, prose composition, or spatial reasoning.

At a high level there are two reasons that a naïve view of the eval results could lead someone to overestimate the current state of AI capability:

1. AI models can solve the same tasks as humans using different methods that require less general intelligence. Modern LLMs can memorize many more facts and narrow heuristics than any human, making shallow pattern-matching strategies much more viable.

2. Different dimensions of general intelligence are much more correlated in humans than in AI models. Human cognitive profiles are generally much smoother than AI models’. If someone is very good at math, they are probably also better than average at writing prose and visualizing shapes. An LLM’s math and writing abilities, on the other hand, can diverge enormously, and its math and spatial-reasoning abilities even more so.

By (2) I am not denying that performance on different evals is highly correlated in models, and highly correlated with training FLOPs (thanks to Stefan Schubert for sharing this paper). But to conclude that dimensions of intelligence are correlated, it’s not enough to observe that test subscores are correlated. An additional premise is required: that the subscores actually track dimensions of intelligence. If it turned out that people were often scoring very high on tests but failing at tasks in real life (for example because the tests kept leaking and people would memorize the answers), I’d stop caring whether the tests are correlated.
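To make the leaked-test hypothetical concrete, here is a toy simulation. It is an illustration under made-up assumptions, not data from any real eval: every name and parameter is hypothetical, and it models the extreme case where both test scores are driven entirely by how much leaked material a test-taker memorized, while real-world performance depends on an independent ability.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate(n=5000, noise=0.3, seed=0):
    """Return (corr between two tests, corr between a test and a real task)."""
    rng = random.Random(seed)
    # Assumption: memorization and real ability are independent.
    memorized = [rng.gauss(0, 1) for _ in range(n)]
    ability = [rng.gauss(0, 1) for _ in range(n)]
    # Both tests reward memorized answers plus a little noise.
    test_a = [m + noise * rng.gauss(0, 1) for m in memorized]
    test_b = [m + noise * rng.gauss(0, 1) for m in memorized]
    # The real-world task rewards actual ability plus a little noise.
    real_task = [a + noise * rng.gauss(0, 1) for a in ability]
    return pearson(test_a, test_b), pearson(test_a, real_task)


if __name__ == "__main__":
    r_tests, r_real = simulate()
    print(f"test A vs test B:    {r_tests:.2f}")
    print(f"test A vs real task: {r_real:.2f}")
```

In this setup the two tests correlate strongly with each other while telling you almost nothing about the real task, so the correlation between subscores alone cannot establish that they track dimensions of intelligence.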

Ege Erdil discusses AI models’ failures to generalize despite impressive eval results on the Dwarkesh podcast episode featuring him and Tamay Besiroglu:

> We still are very far, it seems like, from an AI model that can take a generic game off Steam. Let’s say you just download a game released this year. You don’t know how to play this game. And then you just have to play it. And then most games are actually not that difficult for a human.

On how performances impressive for a human tell us less about AI models’ capability to do real-world tasks:

> So if you look in absolute terms at what are the skills you need to actually automate the process of being a researcher, then what fraction of those skills do the AI systems actually have? Even in coding, a lot of coding is, you have a very large code base you have to work with, the instructions are very kind of vague. For example you mentioned METR eval, in which, because they needed to make it an eval, all the tasks have to be compact and closed and have clear evaluation metrics: “here’s a model, get its loss on this data set as low as possible”. Or “here’s another model and its embedding matrix has been scrambled, just fix it to recover like most of its original performance”, etc.
>
> Those are not problems that you actually work on in AI R&D. They’re very artificial problems. Now, if a human was good at doing those problems, you would infer, I think logically, that that human is likely to actually be a good researcher. But if an AI is able to do them, the AI lacks so many other competences that a human would have—not just the researcher, just an ordinary human—that we don’t think about in the process of research. So our view would be that automating research is, first of all, more difficult than people give it credit for. I think you need more skills to do it and definitely more than models are displaying right now.

On reasoning models not requiring as much creativity to solve problems:

> …if you actually look at the way they approach problems, the reason what they do looks impressive to us is because we have so much less knowledge. And the model is approaching the problems in a fundamentally different way compared to how a human would. […] you’d ask it some obscure math question where you need some specific theorem from 1850 or something, and then it would just know that, if it’s a large model. So that makes the difficulty profile very different. And if you look at the way they approach problems, the reasoning models, they are usually not creative. They are very effectively able to leverage the knowledge they have, which is extremely vast. And that makes them very effective in a bunch of ways. But you might ask the question, has a reasoning model ever come up with a math concept that even seems slightly interesting to a human mathematician? And I’ve never seen that.